Tell us about the browser wars, grandpa!

After recently reading this blog post, I was reminiscing about the circuitous route that got me into web development. I’ve given a brief account of this before, but I thought it was worth recounting in detail, as it may be of interest to those looking to get into the field. I also just like to waffle about the olden days…

In 1997 I was newly arrived in Bristol, a recent-ish graduate in Environmental Science with the compulsory couple of years' post-uni experience of travelling and doing random jobs, starting life in a new city and wondering what life had in store for me. I started by trying to forge a career in conservation, juggling voluntary work at a small environmental organisation with a painful part-time job in a call centre to (barely) pay the bills. My job applications were getting me nowhere – although I had a degree, I had very little relevant work experience, and I was lucky to receive a rejection letter, let alone an interview. To rub salt in the wound, I was turned down for a part-time, minimum-wage admin job at the organisation where I was volunteering (ironically, the reason given was "lack of experience with databases"!).

At the time I hadn't used the internet. I had seen people using it a couple of years previously in the computer lab when I was at university: a classmate showed me a terminal screen displaying a text web page with some jokes on it, and I was severely underwhelmed. So several years passed with no inclination to use the internet, until someone (I wish I could remember who – I owe them a pint!) suggested that if I saved my CV out of MS Word as HTML, I could upload it to the internet and people might find it and offer me a job. Willing to try anything, after a bit of research (talking to people – remember I'd never been on the internet at this point), I took a trip to PC World, bought a dial-up modem and picked up a Freeserve CD-ROM. That evening I uploaded my HTML CV as the index page of my free webspace, and then surfed the internet for the first time.

Disappointed at the lack of job offers the next day, I bought a graphic design magazine which came with a CD-ROM containing some HTML website templates. I hacked around with some of them using Notepad. It was all a bit confusing (framesets, JavaScript rollover images, etc.), but I was pleased that I could work out how to customise the pages and add my own content without needing any specialist software, so I went on to create a website for the environmental organisation I was volunteering for, along with a few other personal project sites. I bought a copy of "HTML for Dummies" and learned how to create websites from scratch. I also obsessively studied the HTML source of any site I came across – I wasn't happy until I understood how it worked.

Interestingly, a few weeks later one of my first emails was a complaint from a design agency. When they typed into AltaVista the name of the environmental organisation they had just built a website for, the fugly site that I had built came up as number one, but their site didn't show up at all. I took a look at their version and, when it (eventually) loaded, saw that they had created a "website" that consisted of a single JPEG. I hadn't submitted my version to any search engines, but I had taken the time to contact other environmental organisations with websites and ask if they would add a link to my site. I did this purely because at the time I assumed you had to pay to get listed on a search engine, so people would only find my DIY site by surfing to it. At this point the penny dropped: although my experience amounted to a few evenings' experimentation, I was already ahead of a professional design agency in my knowledge of how the internet works.

In 1998 I got a new temp admin job (ok, it was "receptionist", but I like to gloss over that) in the computer science dept of a university, where I had access to relatively fast, always-on internet. Even though it wasn't part of my remit, I volunteered to help keep the department intranet up to date, and spent most of my time teaching myself HTML and JavaScript while I pretended to work. I also started experimenting with Paint Shop Pro and voluntarily building websites for friends and colleagues. This job was a massive improvement over the call centre, but I was still pretty much on minimum wage and felt like I was going nowhere. Still, at least I had a new hobby...

A few months in, I took a life-changing phone call. A cold call from a recruitment agent is the bane of any receptionist's life, but this one phoned the department asking if we'd stick up a notice asking for graduates with HTML knowledge, as he had a big client looking for several people to start ASAP. I obliged, but also, after some agonising over whether I was good enough (scarred by constant job application rejections), emailed him a link to my personal website and CV, which now contained some inflated references to my web design experience. A week later I went for an interview. Two weeks later I was the director of a one-man-band Ltd company, sitting on a train to Cardiff to start work as a contract "web designer" in a new department of BT.

I suddenly had a career – for the first time ever I was making an acceptable living, and for the first time I had colleagues to swap notes with. Someone taught me how to use layers in Photoshop, someone else how to lay out web pages using tables and framesets. The "maverick" of the department demoed some experiments he had been doing with "Cascading Style Sheets", which could be used on newer browsers such as IE4. We churned out hundreds of terrible but improving websites, giving small businesses their first web presence. We huddled round a monitor, confused and delighted, as we stumbled across some of the first Macromedia Flash websites appearing on the web. HTML was dead, we naively decided – we needed to learn Flash fast or become obsolete. Luckily, I was also introduced to using ASP and Access databases to create dynamic websites, and started experimenting with PHP. The rest is on LinkedIn.

The point I'm laboriously making is that, although the techniques have moved on, I'm self-taught and I'm colleague-taught. There were no college courses back then that taught the skills needed, because the technology was being constantly invented and reinvented. This isn't just a history lesson: the technology is *still* being invented, and the courses and tutors can't keep up. The nearest we have now are workshops run by industry professionals and conferences with talks by industry professionals. But even then, don't think you'll be learning anything that you can't learn from someone's blog, from studying the source code of their site (for front-end at least; try GitHub for server-side code examples), or from someone you chat to on a mailing list, Twitter, an IRC channel or a forum. The cutting-edge layout techniques are being invented and experimented with right now by a fifteen-year-old in his or her bedroom, months or years before they will appear in a workshop or conference.

I still have to teach myself new techniques or technologies every time I start a new project, and will continue to keep learning. Despite being in the game for over a decade, I still feel grateful that I've managed to find something I enjoy doing and can make a living from. I'm aware that I can't become complacent: even though I don't jump instantly on every new technique that gets shouted round the twitterverse, I know I need to keep up with anything pertinent or risk getting left behind. I also freely share my knowledge, via this blog, via Twitter, and via old-skool mailing lists and forums. I hope it is always this way, with new techniques to keep things fresh and the best learning resources kept out from behind a paywall, so that anyone with the determination and aptitude can pursue a career (or hobby) in web design and/or development.

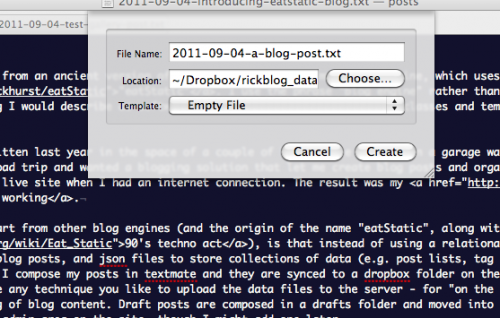

If you are looking to learn web development, the best thing you can do is find yourself some free web space and set up a personal sandbox site to experiment with and display what you are working on. Set up a blog to document it all. My lucky break came in the early days of learning HTML, just as there was a "goldrush" for people with HTML knowledge – you may have to aim a bit higher now. The goldrushes are still happening, though: right now it seems to be mobile website development, Facebook app/page development (shudder) and Drupal development, but who knows what it will be this time next year. The point is that you will already need experience in what employers are looking for, and the person to teach it to you is none other than yourself.

Luckily, this is a unique industry where knowledge is shared freely and we all learn from each other. Whilst it may implode into bickering sometimes, we have an active, talkative web design and development community. If you specialise in a particular technique or technology, there are whole thriving sub-communities dedicated to it, holding meet-ups and grassroots conferences and discussing it day by day, hour by hour. Go and find them and get involved!